About NovelHopQA

NovelHopQA is a large-scale benchmark designed to test how language models handle multi-step reasoning over long passages from real novels. With 4,000 questions spanning 64k–128k-token excerpts and up to 4 reasoning hops, it reveals that even top models struggle as tasks get longer and more complex. NovelHopQA highlights key challenges in deep comprehension and multi-hop inference, providing a valuable tool for improving future language models.

Key Findings & Impact

Paper Overview

Current large language models (LLMs) struggle to answer questions that span tens of thousands of tokens, especially when multi-hop reasoning is involved. When crucial evidence is buried in the middle of a long context, accuracy can plunge by more than 20 points (Liu et al., 2023), and even frontier models score below 50% exact match on multi-document suites, showing that larger context windows alone cannot solve cross-document reasoning. Prior benchmarks explore long-context comprehension or multi-hop reasoning in isolation; NovelHopQA is the first to jointly vary hop depth (1–4 hops) and context length (64k–128k tokens) in natural narrative settings.

Related Work

Prior benchmarks fall into two groups:

- Multi-hop reasoning: WikiHop (Welbl et al., 2018) and HotpotQA (Yang et al., 2018) probe two-hop reasoning over short Wikipedia passages. These datasets catalyzed advances in multi-hop inference but restrict inputs to at most a few thousand tokens.

- Long context: NarrativeQA (Kočiský et al., 2017), QuALITY (Pang et al., 2022), and NovelQA (Wang et al., 2024) embrace longer inputs but focus on single-hop or summary questions. Standardized long-context suites like LongBench (Bai et al., 2024) and L-Eval (An et al., 2023) show that models effectively use only a fraction of their context windows, but these suites keep hop depth fixed.

NovelHopQA fills this gap by simultaneously testing reasoning depth and long-context comprehension in coherent narratives.

Benchmark Features

- 4,000 multi-hop QA examples from 83 full-length public-domain novels

- Context windows ranging from 64k to 128k tokens

- Questions require integrating 1–4 reasoning hops across narrative chains

- Human validation ensures high alignment (>6.5/7) and hop-match accuracy (>94%)

Methodology

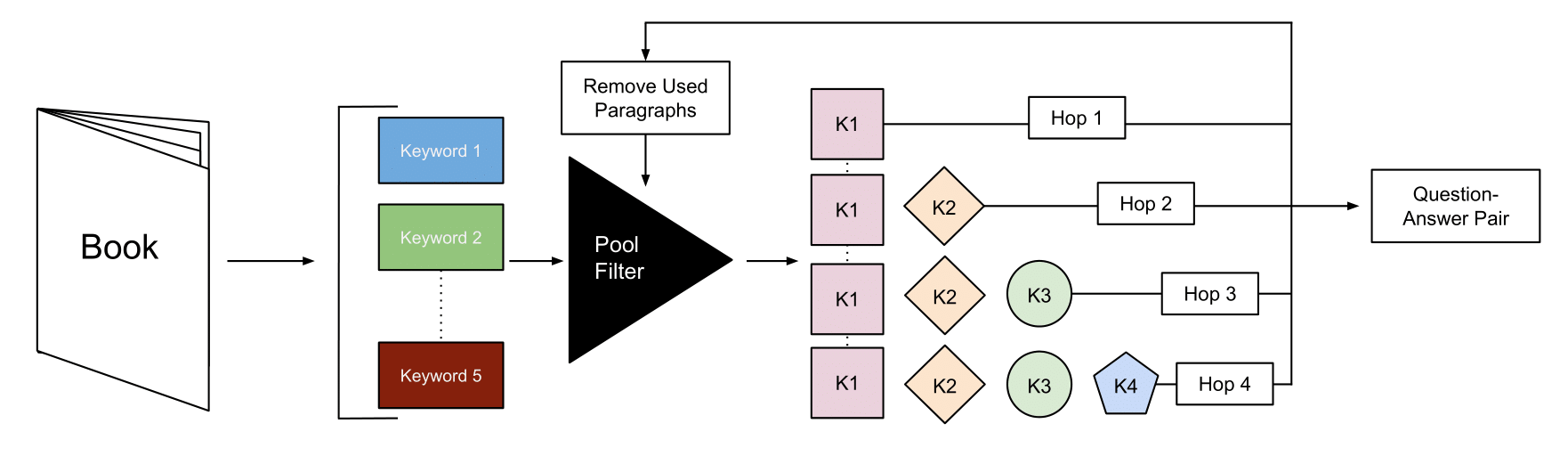

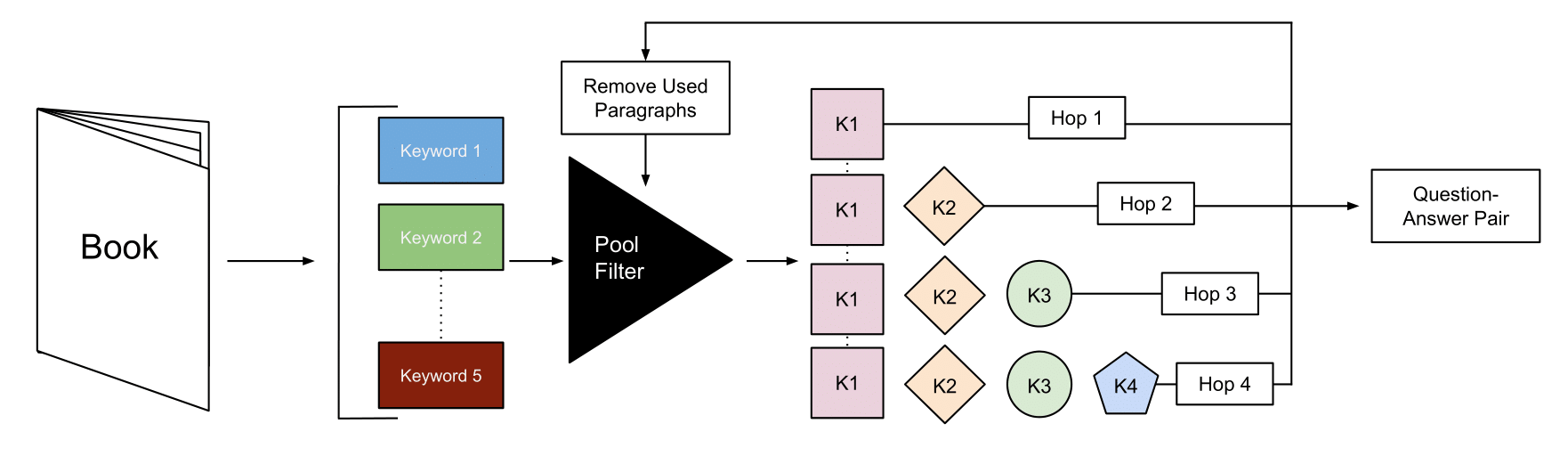

We build NovelHopQA through a four-stage pipeline:

- Novel Selection: We selected 83 English novels from Project Gutenberg, spanning mystery, adventure, romance, and literary classics, including both first- and third-person narration.

- Anchor-Keyword Discovery: For each novel, we prompted GPT-4o-mini to suggest five "anchor" keywords—characters, locations, or objects central to the plot. If any keyword appears fewer than 50 times in the text, we discard and re-sample.

- Paragraph Chaining & QA Generation: We implemented a keyword-guided process that:

  - Selects paragraphs containing specific keywords

  - Extracts new related keywords for subsequent hops

  - Chains paragraphs with increasing hop depth (1–4)

  - Regenerates QA pairs at each step to integrate new evidence

- QA Validation: We filter examples using model and human validation to ensure answerability and correct hop depth. Ten human annotators confirmed high alignment (>6.5/7) and hop-match accuracy (>94%).
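The frequency check in the anchor-keyword stage can be sketched as follows. This is a minimal illustration, not the authors' code: the function names, the whole-word case-insensitive matching rule, and the choice to reject only the failing keywords (rather than the whole set of five) are assumptions. Only the 50-occurrence threshold comes from the description above.

```python
import re

MIN_OCCURRENCES = 50  # threshold stated in the pipeline description


def count_occurrences(text: str, keyword: str) -> int:
    """Count whole-word, case-insensitive matches (the matching rule is an assumption)."""
    pattern = r"\b" + re.escape(keyword) + r"\b"
    return len(re.findall(pattern, text, flags=re.IGNORECASE))


def split_anchors(text: str, candidates: list[str], min_count: int = MIN_OCCURRENCES):
    """Split candidate anchors into (kept, rejected) by how often they occur in the novel."""
    kept, rejected = [], []
    for keyword in candidates:
        target = kept if count_occurrences(text, keyword) >= min_count else rejected
        target.append(keyword)
    return kept, rejected
```

In a full pipeline, a non-empty `rejected` list would trigger another keyword-suggestion prompt to re-sample replacements.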

Results

We evaluated six state-of-the-art models: o1, GPT-4o, GPT-4o-mini, Gemini 2.5 Pro, Gemini 2.0 Flash, and Gemini 2.0 Flash Lite. Key findings:

- Impact of hop depth: All models exhibit consistent performance degradation as hop depth increases. On average, accuracy drops roughly 12 points from 1-hop to 4-hop at 64k context length.

- Impact of context length: Longer contexts also lead to reduced accuracy, though the effect is milder than that of hop count. Across models, 1-hop performance drops about 5 points when moving from 64k to 128k contexts.

- No model maintains strong performance on the hardest tasks (4-hop at 128k), where even top models dip below 80% accuracy.
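The headline numbers in these findings can be reproduced from the Avg. column of the results table. The snippet below is a small arithmetic check; the dictionary layout and helper name are illustrative choices, and the values are copied from the table.

```python
# Average accuracy (%) across the six models, copied from the Avg. column.
AVG = {
    ("64k", 1): 86.73,
    ("64k", 4): 74.69,
    ("128k", 1): 81.99,
    ("128k", 4): 69.65,
}

# Best single-model accuracy at the hardest setting (4-hop, 128k): Gemini 2.5 Pro vs. o1.
BEST_4HOP_128K = max(78.55, 78.80)


def drop(start: tuple[str, int], end: tuple[str, int]) -> float:
    """Accuracy drop in percentage points between two (context, hop) settings."""
    return round(AVG[start] - AVG[end], 2)


hop_drop_64k = drop(("64k", 1), ("64k", 4))        # 12.04 points: 1-hop -> 4-hop at 64k
context_drop_1hop = drop(("64k", 1), ("128k", 1))  # 4.74 points: 64k -> 128k at 1-hop
combined_drop = drop(("64k", 1), ("128k", 4))      # 17.08 points: easiest -> hardest setting

print(hop_drop_64k, context_drop_1hop, combined_drop, BEST_4HOP_128K < 80)
```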

NovelHopQA Team

NovelHopQA is developed by researchers at Algoverse AI Research.

Contact

For questions about the benchmark, collaboration opportunities, or to report issues, please contact us at:

abhaygupta1266@gmail.com

We welcome contributions and feedback from the research community to help improve the benchmark.

Citation

@misc{gupta2025novelhopqadiagnosingmultihopreasoning,
  title={NovelHopQA: Diagnosing Multi-Hop Reasoning Failures in Long Narrative Contexts},
  author={Abhay Gupta and Michael Lu and Kevin Zhu and Sean O'Brien and Vasu Sharma},
  year={2025},
  eprint={2506.02000},
  archivePrefix={arXiv},
  primaryClass={cs.CL},
  url={https://arxiv.org/abs/2506.02000}
}

This table reports the accuracy (%) of six language models on the NovelHopQA benchmark, grouped by context length (64k, 96k, 128k tokens) and reasoning hop count (1–4). For each group, the highest model accuracy is bolded. Results show that all models experience accuracy drops as context length and hop count increase, revealing the challenge of multi-hop reasoning over long narratives.

| Context | Hop | 🥇 Gemini 2.5 Pro | 🥈 o1 | 🥉 GPT-4o | Gemini 2.0 Flash | Gemini 2.0 Flash Lite | GPT-4o-mini | Avg. |
|---|---|---|---|---|---|---|---|---|
| 64k | 1 | 92.34 | **92.51** | 90.12 | 87.37 | 82.53 | 75.49 | 86.73 |
| 64k | 2 | **87.84** | 87.66 | 84.25 | 77.02 | 71.39 | 74.77 | 80.48 |
| 64k | 3 | **85.12** | 84.99 | 81.34 | 74.25 | 70.05 | 73.14 | 78.13 |
| 64k | 4 | **82.45** | 82.15 | 78.47 | 71.76 | 65.33 | 68.04 | 74.69 |
| 96k | 1 | 90.12 | **90.35** | 88.83 | 82.26 | 78.44 | 72.25 | 83.71 |
| 96k | 2 | **86.03** | 85.88 | 82.67 | 74.02 | 67.04 | 67.44 | 77.18 |
| 96k | 3 | **83.71** | 83.41 | 80.41 | 73.38 | 66.05 | 66.97 | 75.66 |
| 96k | 4 | **80.98** | 80.68 | 76.92 | 70.26 | 62.81 | 65.59 | 72.87 |
| 128k | 1 | **89.10** | 88.76 | 86.95 | 81.77 | 75.31 | 70.03 | 81.99 |
| 128k | 2 | **84.70** | 84.33 | 80.52 | 69.13 | 62.21 | 63.95 | 74.14 |
| 128k | 3 | **82.20** | 81.92 | 78.03 | 68.78 | 62.07 | 62.95 | 72.66 |
| 128k | 4 | 78.55 | **78.80** | 74.64 | 67.32 | 57.39 | 61.18 | 69.65 |
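As a consistency check on the table, the Avg. column can be recomputed as the unweighted mean of the six model scores, and the top model per row read off directly. The sketch below does this for three rows; the full model names are taken from the evaluation description and their mapping to the abbreviated column headers is an assumption, as are the helper and variable names.

```python
from statistics import mean

# Column order of the results table (full names assumed from the abbreviated headers).
MODELS = [
    "Gemini 2.5 Pro", "o1", "GPT-4o",
    "Gemini 2.0 Flash", "Gemini 2.0 Flash Lite", "GPT-4o-mini",
]

# Three rows copied from the table: (context, hop) -> per-model accuracy (%).
ROWS = {
    ("64k", 1): [92.34, 92.51, 90.12, 87.37, 82.53, 75.49],
    ("96k", 4): [80.98, 80.68, 76.92, 70.26, 62.81, 65.59],
    ("128k", 4): [78.55, 78.80, 74.64, 67.32, 57.39, 61.18],
}

# Avg. values reported in the table for the same rows.
REPORTED_AVG = {("64k", 1): 86.73, ("96k", 4): 72.87, ("128k", 4): 69.65}


def summarize(setting: tuple[str, int]) -> tuple[float, str]:
    """Return (recomputed average, best model) for one (context, hop) row."""
    scores = ROWS[setting]
    best = MODELS[scores.index(max(scores))]
    return round(mean(scores), 2), best


for setting in ROWS:
    avg, best = summarize(setting)
    print(setting, avg, avg == REPORTED_AVG[setting], best)
```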

Performance Visualizations

The following graphs visualize model performance across different hop depths and context lengths, clearly showing the consistent accuracy drops as both factors increase.

Model Performance Across Context Lengths

These graphs show how model accuracy changes with increasing context length for each hop level, highlighting the combined challenge of long contexts and multi-hop reasoning.

NovelHopQA: Diagnosing Multi-Hop Reasoning Failures in Long Narrative Contexts


Related Work

NovelHopQA builds upon and extends several foundational directions in long-context and multi-hop question answering:

- WikiHop & HotpotQA: Early Multi-Hop Reasoning (Welbl et al., 2018; Yang et al., 2018): Pioneered multi-hop QA over short Wikipedia passages, catalyzing advances in compositional reasoning but limited to short contexts.

- NarrativeQA, QuALITY, NovelQA, NoCha: Long-Context Comprehension (Kočiský et al., 2017; Pang et al., 2022; Wang et al., 2024a; Karpinska et al., 2024): Benchmarks using book- or script-length narratives, focusing on single-hop or summary questions, with NovelQA and NoCha raising the ceiling to 200k tokens and including some multi-hop items.

- MuSiQue & BABILong: Synthetic Stress Tests (Trivedi et al., 2022; Kuratov et al., 2024): Introduce compositional and multi-hop questions using synthetic or stitched contexts, probing model brittleness at scale.

- LongBench, L-Eval, RULER, Marathon: Standardized Long-Context Evaluation (Bai et al., 2024; An et al., 2023; Hsieh et al., 2024; Zhang et al., 2024): Evaluate LLMs on tasks with large context windows, but typically keep reasoning depth fixed.

- FanOutQA & Loong: Multi-Document and Breadth-Oriented QA (Zhu et al., 2024; Wang et al., 2024b): Assess cross-document and multi-hop reasoning over multiple Wikipedia pages or diverse domains, with FanOutQA focusing on breadth and Loong on domain diversity.

- LooGLE & LV-Eval: Robustness and Length Control (Li et al., 2024a; Yuan et al., 2024): Control for training-data leakage, add misleading facts, and test robustness across length bands up to 256k tokens.

- Architectural Advances: Longformer, BigBird, Transformer-XL, LongRoPE (Beltagy et al., 2020; Zaheer et al., 2021; Dai et al., 2019; Ding et al., 2024): Enable LLMs to process tens or hundreds of thousands of tokens using sparse attention, recurrence, and advanced positional encodings.

- Retrieval-Augmented and Memory Approaches (Lewis et al., 2021; Wu et al., 2022; Xu et al., 2024; Li et al., 2024c): Improve long-context QA by combining retrieval and external memory with LLMs, outperforming context-only baselines at scale.

- Failure Mode Analyses: Lost in the Middle, NeedleBench (Liu et al., 2023a,b; Li et al., 2024b): Reveal that even with large windows, models struggle with positional and retrieval brittleness, especially when evidence is scattered.

NovelHopQA is the first benchmark to jointly vary both context length and reasoning depth in natural narrative settings, providing a controlled diagnostic for multi-hop reasoning at scale.

Benchmark Features

| Feature | Details |
|---|---|
| Number of QA examples | 4,000 |
| Source novels | 83 (Project Gutenberg) |
| Context window sizes | 64k, 96k, 128k tokens |
| Reasoning hops | 1–4 |
| Human validation | >6.5/7 alignment, >94% hop-match accuracy |

Methodology Pipeline

Simplified Methodology

Technical Pipeline Details

For each book and hop depth $H \in \{1, 2, 3, 4\}$, we assemble contexts and QA pairs as follows:

- Hop 1: Select a paragraph containing one of the book's anchor keywords $k_1$; this paragraph is the initial context $C_1$. Prompt GPT-4o to generate a single-hop QA pair $(Q_1, A_1)$ from this paragraph.

- Hops $h \in \{2, \dots, H\}$:

  - Extract a new keyword $k_h$ from the context $C_{h-1}$ using a related-keyword prompt.

  - Sample a paragraph that contains both $k_1$ and $k_h$, and append it to the growing context: $C_h = C_{h-1} \,\Vert\, \text{new-paragraph}$.

  - Prompt GPT-4o to re-generate a single QA pair $(Q_h, A_h)$ over the full context $C_h$, ensuring the new QA integrates evidence from all $h$ paragraphs.

- Paragraph Exclusivity: Remove each selected paragraph from the pool to prevent reuse. If no matching paragraph is found after seven attempts, abort the chain and restart with a fresh anchor.

This process matures each datapoint from $(C_1, Q_1, A_1)$ through $(C_H, Q_H, A_H)$, yielding coherent multi-hop QA examples grounded in authentic narrative context. Each 64k, 96k, or 128k window is sampled from a continuous span, with all hop paragraphs required to fall within it, so the QA chain reflects a cohesive narrative flow.
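The chaining loop described above can be sketched as follows. This is a hedged illustration rather than the authors' implementation: `build_chain`, `related_keyword`, and `generate_qa` are hypothetical names, the two callables stand in for the GPT-4o prompts, keyword matching is plain substring search, and each of the seven attempts is assumed to re-extract a keyword. The requirement that all paragraphs fall inside one continuous 64k–128k window is not modeled.

```python
import random
from typing import Callable, Optional

MAX_ATTEMPTS = 7  # retry budget stated in the text


def build_chain(
    paragraphs: list[str],
    anchor: str,
    hops: int,
    related_keyword: Callable[[str], str],          # stand-in for the related-keyword prompt
    generate_qa: Callable[[str], tuple[str, str]],  # stand-in for the QA-generation prompt
    rng: random.Random,
) -> Optional[tuple[str, str, str]]:
    """Return (context, question, answer) for a chain of `hops` paragraphs, or None on abort."""
    pool = list(paragraphs)  # copy so the caller's list is left untouched

    # Hop 1: any paragraph mentioning the anchor keyword k_1.
    candidates = [p for p in pool if anchor in p]
    if not candidates:
        return None
    chosen = rng.choice(candidates)
    pool.remove(chosen)  # paragraph exclusivity
    context = chosen
    question, answer = generate_qa(context)

    # Hops 2..H: extend the context with a paragraph containing both k_1 and k_h.
    for _ in range(2, hops + 1):
        for _attempt in range(MAX_ATTEMPTS):
            keyword = related_keyword(context)
            candidates = [p for p in pool if anchor in p and keyword in p]
            if candidates:
                break
        else:
            return None  # no match after seven attempts; caller restarts with a fresh anchor
        chosen = rng.choice(candidates)
        pool.remove(chosen)
        context = context + "\n\n" + chosen            # C_h = C_{h-1} || new paragraph
        question, answer = generate_qa(context)        # regenerate QA over the full context
    return context, question, answer
```

Passing the two prompts in as callables keeps the control flow testable with simple stubs before any model is wired in.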

Golden-Context Filtering: To ensure answerability, all six models are evaluated on the original golden contexts used to generate each QA pair. Any question missed by any model is discarded, resulting in a dataset where all retained QA pairs are answerable by current leading models. This step ensures high dataset validity; detailed results are provided in the research paper.
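The keep-only-if-every-model-succeeds rule can be written compactly. The sketch below is illustrative: the example schema, the `Model` callable signature, and the normalized exact-match scoring are assumptions, since the text here does not specify how correctness was judged.

```python
from typing import Callable

Example = dict[str, str]           # hypothetical schema: "context", "question", "answer"
Model = Callable[[str, str], str]  # (golden context, question) -> predicted answer


def normalized_match(prediction: str, gold: str) -> bool:
    """Case- and whitespace-insensitive exact match (the scoring rule is an assumption)."""
    return " ".join(prediction.lower().split()) == " ".join(gold.lower().split())


def golden_context_filter(
    examples: list[Example],
    models: list[Model],
    is_correct: Callable[[str, str], bool] = normalized_match,
) -> list[Example]:
    """Keep only examples that every model answers correctly on the golden context."""
    return [
        ex
        for ex in examples
        if all(is_correct(model(ex["context"], ex["question"]), ex["answer"]) for model in models)
    ]
```

Because `all` short-circuits, an example is dropped as soon as one model misses it, which matches the "missed by any model" criterion.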

Irrelevant and No-Context Sanity Check: To confirm that questions require actual reasoning and are not solvable by recall alone, 800 QA pairs (100 per hop) are tested under irrelevant and no-context conditions. Models perform poorly in these settings, indicating that correct answers depend on contextual grounding rather than memorization. This strengthens the benchmark's focus on true reasoning. Detailed results are provided in the research paper.

Human Validation Results

The following table reports the average scores from 10 independent human annotators who evaluated the alignment and hop-match accuracy for each hop depth H ∈ {1, 2, 3, 4}. Alignment is rated on a 1–7 Likert scale, and Hop Match measures the percentage of questions judged to require exactly H reasoning steps. High average alignment (>6.5/7) and hop-match (>94%) indicate strong dataset quality and clear multi-hop structure. These results demonstrate that the questions are both contextually grounded and require the intended number of reasoning steps.

| Metric | H = 1 | H = 2 | H = 3 | H = 4 |
|---|---|---|---|---|
| Alignment (1–7) | 6.69 | 6.58 | 6.58 | 6.57 |
| Hop Match (%) | 95.9 | 94.9 | 94.9 | 95.2 |

Evaluation Results


These results highlight that simply increasing context window size isn't enough—robust multi-hop reasoning remains a key challenge for LLMs, even at the frontier of model capabilities.
